Celebrating Our Second Anniversary

December 2, 2023 marked the 2nd anniversary of DAIR’s launch and the 3rd anniversary of my ouster from Google. While I can’t thank Google for the horrific way that I, my co-lead Meg Mitchell, and the rest of our team were treated, I am thankful for the many people who organized on our behalf, pushed me to create something different, and supported me in that journey. I am very aware that without this organizing and support, I could have been one of the many women in STEM whose reputations and careers were buried after speaking up. Instead, I was given the opportunity to build an institute where more of us who can’t fit in other organizations can find a home, where we can ring the alarm on the issues we see without facing repercussions from within the organizations we’re in (although we face many from outside), and where we can put forward our vision for a technological future that serves us.

In the last two years, I have seen how DAIR’s existence supports many others who face challenges similar to mine, whether they are tech workers retaliated against for organizing for better conditions or PhD students facing discrimination in hostile work environments. If people from so many backgrounds lack the mental space to work on technology because they have to constantly fight their environments, how can we ever get to a technological future that actually serves people?

That’s why one of the first things we did at DAIR was to clarify our research philosophy, because how we perform research is just as important as the final product. We landed on these seven principles: community, trust and time, knowledge production, redistribution, accountability, interrogating power, and imagination.

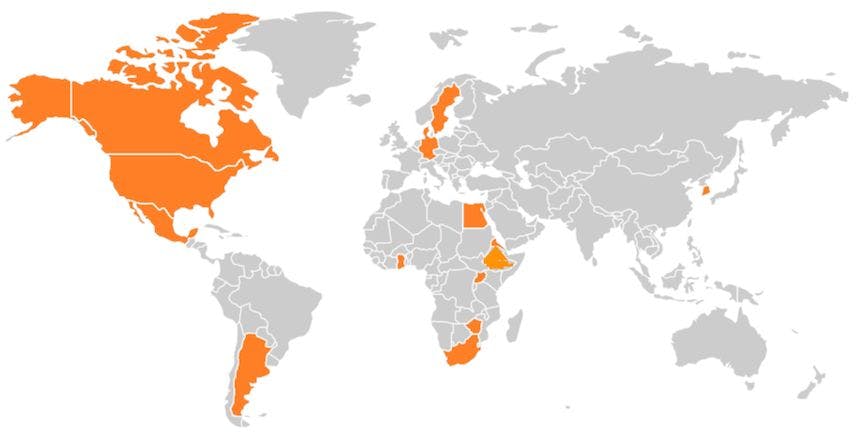

Our research principles encourage us to do work pertaining to the many communities we’re in and to avoid parachute science. Instead of contributing to a brain drain by forcing people to move to a particular location to do this research, we encourage them to stay embedded in the communities they know: the DAIR team comes from and is located in 14 countries across 3 continents. The “Distributed” in DAIR, however, stands not only for the geographical spread of our organization, but also for our belief that our role is to strengthen public interest and community-rooted technology ecosystems, and the many organizations in them.

A number of our fellows and researchers are embedded in other institutions, or have created or grown their own organizations with our support. For instance, Asmelash Teka runs the machine translation startup Lesan, which some advised to close up shop because Big Tech companies, which claim to have one-size-fits-all models, supposedly render companies like his obsolete. I’m glad that Asmelash did not listen to this advice. He instead used his experience to show that organizations like his outperform the “one model for everything” approach of Big Tech companies, and to raise awareness about the harms posed by such claims. Our institute doesn’t exist in a vacuum, and our success depends on a strong ecosystem of community-rooted tech organizations that can collectively strengthen alternatives to the status quo.

With your support, the last two years have helped us strengthen this ecosystem, and we’re already starting to see a shift in the voices that shape AI research practices. Adrienne Williams, who was an Amazon delivery driver before joining DAIR, received the Just Tech Fellowship to study Amazon’s wage theft practices, and both Adrienne and Dylan Baker have written op-eds as part of their fellowships at the OpEd Project. In the last year, we published 15 peer-reviewed papers, op-eds, book chapters, and white papers in top-tier academic conferences and journals, with multilateral organizations like the UN, and in leading news outlets ranging from The Economist to Scientific American. Our papers have received best paper awards, we have organized conferences and workshops around the world, and we have hosted events for collective education. We’ve given more than 100 talks and appeared in the press worldwide. We even have a podcast, Mystery AI Hype Theater 3000, hosted by Alex Hanna and friend of the institute Emily M. Bender, which you have downloaded 32 thousand times! It turns out that we’re not the only ones who are tired of AI hype.

Changing the voices that influence the trajectory of AI research practices is important because research is a space where we have a chance to shape the future: everything we see commercially is first done in the research world. Because of that, we have the opportunity to serve as an early warning system before harmful practices proliferate. On the flip side, we can work on alternative methods that shape how technology is developed and deployed. We were happy to see some of the most prominent conferences in the field (e.g., ACL and the NeurIPS Datasets and Benchmarks Track) require datasets to be accompanied by documentation like datasheets. Some transparency requirements are now also part of the EU AI Act and the US Executive Order on AI.

The real harms of AI systems. In 2021, I wrote an op-ed for the Guardian outlining the need for alternative funding for AI research that is not tied to “a continued race to figure out how to kill more people more efficiently, or make the most amount of money for a handful of corporations around the world.” Now, more than ever, we need to focus on the real harms of AI rather than imagined “existential risks.”

DAIR is part of a movement towards this goal, collaborating with workers around the world because we believe that the labor movement has a key role to play in curbing harmful AI systems. For example, our paper “AI Art and its Impact on Artists,” which was written in collaboration with artists, was translated into Spanish by the art collective Arte Es Ética and added to the Create Don’t Scrape website. In my view, there is no greater honor than receiving appreciation from the community most impacted by one’s work. Krystal Kauffman, the lead organizer of Turkopticon, a US advocacy group for data workers, is a fellow at DAIR. Together, our organizations have pushed legislators to take worker exploitation seriously. We also collaborate with a number of workers who founded the first African Content Moderators Union, and we have consulted with writers, artists, and other workers in their fight to curb AI systems that infringe on their rights.

Our team members have been victims of the Tigray genocide, the deadliest war of the 21st century, which has killed an estimated 700,000 to 800,000 people (which I believe is an underestimate) while barely receiving any media coverage. So when the US-funded and enabled genocide in Gaza began, we were one of the first institutes to put out a statement on the matter, and we are still one of the few tech organizations that have done so (shout out to Logic(s) Magazine for being another one). The statement was then translated into Japanese and Arabic by members of the tech community, and we also received messages from students around the world (e.g., in Cape Town and Berlin) who used it in their protests. To amplify the #NoTechForApartheid movement started by Google and Amazon employees, we hosted a #NoTechForApartheid virtual event in partnership with Haymarket Books, which drew close to 7,000 viewers, with speakers including Caroline Hunter, who started the US tech worker-led movement to divest from apartheid South Africa. As we see more usage of AI in autonomous warfare, including the application of what has been called “a mass assassination factory” used against the people of Gaza, we have heard from many that our institute’s moral clarity has helped them speak up in a climate of retaliation. To encourage others to take a stand, we helped our peers draft and circulate a letter from the responsible AI community, which was signed by over 600 people. Given that Big Tech companies are turning into military contractors, that AI is a field created and funded by militaristic entities, and that the field is moving more and more into autonomous warfare, we need more voices within tech to unequivocally oppose AI-enabled genocides, and to support the few voices that do.

The technological future we're working towards. Like many of you, we find it difficult to carve out time for the slow work of imagining what type of tech future we can build, given that there are too many fires to put out at the moment. As Ruha Benjamin reminds us, “Remember to imagine and craft the worlds you cannot live without, just as you dismantle the ones you cannot live within.” (If you haven’t ordered her book yet, I suggest you do so.) To that end, we are launching a Possible Futures Series in 2024, with short speculative pieces on what types of technological futures we can build if we rid ourselves of the assumption that the tech we currently have is inevitable. Dylan Baker introduces one piece in a 10-minute talk, and we hope to work with all of you to collectively encourage ourselves to carve out time to imagine something different.

For now, we have roughly organized our research activities into 3 buckets: 1) data for change, where we create AI systems that equip historically marginalized groups to gather evidence that improves their lives, 2) frameworks for AI research and development that start from the needs of people, and 3) alternative tech futures that put power in the hands of many different communities around the world rather than a handful of entities in Silicon Valley. We push back on claims of building one model that solves all tasks for everyone, and support a vision where many different organizations around the world create small, task-specific models whose profits go to people in many different locations. For example, the people who should profit from language technology for a specific language are primarily the speakers of that language, rather than large multinational corporations that have trained models using data from those speakers without consent or compensation.

In summary, in the last two years, with your support, we have built a distributed AI research institute which draws on its members’ experiences as researchers, engineers, activists, journalists, and gig workers. Our work has changed practices around AI development and deployment, supported alternative ecosystems to the status quo, and helped enact policy that protects marginalized groups.

Maintaining our independence. Since entering the nonprofit industrial complex, one question has always been on my mind: how do we stay independent? I started this post by talking about refuge from retaliation, and noting how DAIR houses many people like me who can’t quite fit in other institutions. If that’s the case, then I’m confident that somewhere down the line, we will stand for something that makes someone powerful in the nonprofit industrial complex unhappy. When that happens, it won’t only be my job on the line but the job of everyone else at the institute.

But in order to hold power to account, as one of our research principles says, we have to continue to raise our voices when we see harm, regardless of where it comes from. So far, we have been lucky. We have received overwhelming support from all of you and from our funders. There have been a few hit pieces from those you’d expect, but nothing major. But what if, as described in The Revolution Will Not Be Funded, someone drops us for a stance we take? We are hoping to pilot a revenue diversification strategy in the coming two years to help us maintain our critical voice. Do you have tips for us? Please share them here. We are, as always, thankful to all of you for your support. And those of you who want to donate to our institute can do so here.